Pitch Spelling with Partitura¶

Have you always been bad at spelling bee, do you find that spelling notes makes this even worse. Your time of struggling is over…. Today we going to teach a Model to learn how to pitch spell for you.

Definition¶

Spelling a pitch relates to the system of naming notes by letters (A-G) and sharp(#) and flat (♭) signs - and sometimes double sharp and flat signs, resulting in names or 'spellings' like 'A♭', 'D#', 'F♭♭'.

Translating between frequencies in Hz and such names is non-trivial. You need to consider :

The 'concert pitch' you are taking as a reference

The temperament in which the piece is played

The overall key that the music would be notated in

Use of the correct enharmonic equivalents for accidentals (Using the correct enharmonic equivalent, Purpose of double-sharps and double-flats?)

If translating between, say, MIDI note numbers and 'spelled' names, the first two steps can be skipped.

Spelled pitch names often have an octave number appended for disambiguation - e.g. 'A♭3', 'D#5'.

Some Concrete Examples¶

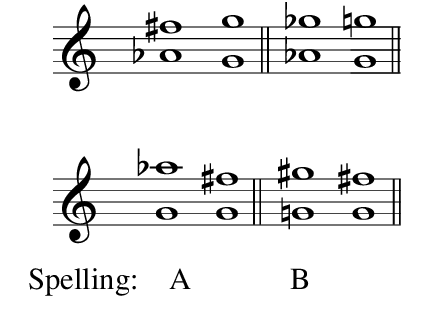

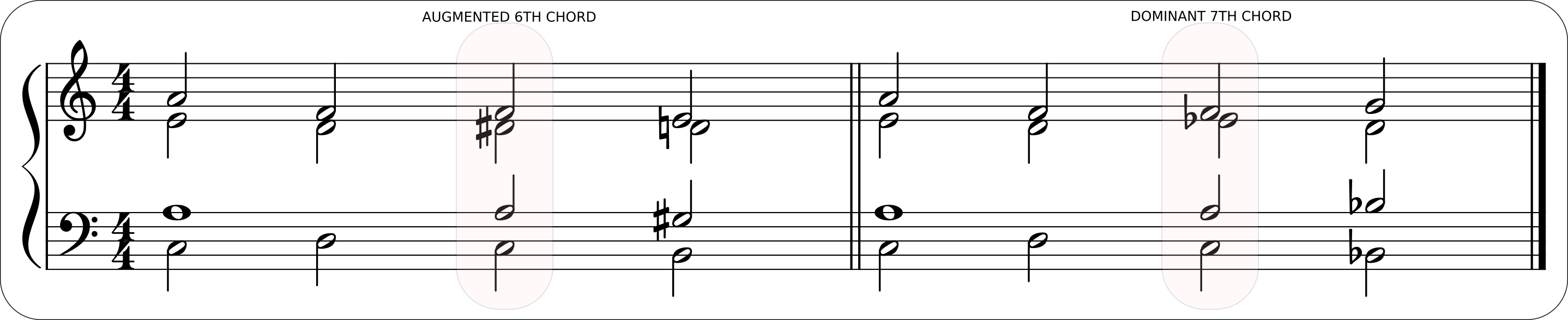

Different pitch spellings of the same content:

How to correctly spell a note may depend on the harmonic progression for example different spelling is appropriate for an Augmented 6th chord vs a borrowed dominant chord progression.

If music theory is not your cup of tea, do not worry. We will view Pitch Spelling as a task from a more engineering perpective.

Some Spelling algorithms¶

Partitura contains an implementation for a standard algorithm for Pitch Spelling. The algorithm in question is called ps13 created by Meredith and al.:

The ps13 pitch spelling algorithm, D Meredith - Journal of New Music Research, 2006

Some notable algorithms and current SOTA is PKSpell.

PKSpell: Data-driven pitch spelling and key signature estimation

F Foscarin, N Audebert, R Fournier-S'Niehotta, 2021

Let’s Get Started¶

In this tutorial we will use the following packages: - partitura The basic I/O for scores, performances and alignments crucial for pitch spelling estimation and evaluation. - Pytorch, i.e. torch Library for ML more on https://pytorch.org/ - pytorch_lightning Wrappers for Pytorch for better visualization and encapsulation more on https://www.pytorchlightning.ai/ - pandas for reading .tsv files

Let’s start by downloading ASAP a dataset containing note alignments of symbolic performances to their respective scores perfect for a Pitch-Spelling evaluation framework.

[1]:

try:

import google.colab

IN_COLAB = True

except:

IN_COLAB = False

if IN_COLAB:

# Issues on Colab with newer versions of MIDO

# this install should be removed after the following

# pull request is accepted in MIDO

# https://github.com/mido/mido/pull/584

! pip install mido==1.2.10

!pip install git+https://github.com/cpjku/partitura.git@develop

!pip install pytorch_lightning

[2]:

import partitura as pt

import torch

import torch.nn as nn

from torch.nn import functional as F

import numpy as np

import os

import tqdm

import pandas as pd

import pytorch_lightning as pl

from torch.utils.data import DataLoader, Dataset

import warnings

warnings.filterwarnings('ignore')

[3]:

if IN_COLAB:

if not os.path.exists("./asap-dataset"):

!git clone --single-branch https://github.com/CPJKU/asap-dataset.git

DATASET_DIR = os.path.normpath("./asap-dataset")

else:

import sys, os

sys.path.append(os.path.join(os.path.dirname(os.path.dirname(os.getcwd())), "utils"))

from load_data import init_dataset

DATASET_DIR = init_dataset(name="ASAP")

The ASAP Dataset with note alignments¶

ASAP is a dataset of aligned musical scores (both MIDI and MusicXML) and performances (audio and MIDI), all with downbeat, beat, note, time signature, and key signature annotations. ASAP is the the largest available fully note-aligned dataset to date (09/11/2022).

Content ASAP contains 236 distinct musical scores and 1067 performances of Western classical piano music from 15 different composers (see Table below for a breakdown).

Composer |

MIDI Performance |

Audio Performance |

MIDI/XML Score |

|---|---|---|---|

Bach |

169 |

152 |

59 |

Balakirev |

10 |

3 |

1 |

Beethoven |

271 |

120 |

57 |

Brahms |

1 |

0 |

1 |

Chopin |

289 |

108 |

34 |

Debussy |

3 |

3 |

2 |

Glinka |

2 |

2 |

1 |

Haydn |

44 |

16 |

11 |

Liszt |

121 |

48 |

16 |

Mozart |

16 |

5 |

6 |

Prokofiev |

8 |

0 |

1 |

Rachmaninoff |

8 |

4 |

4 |

Ravel |

22 |

0 |

4 |

Schubert |

62 |

44 |

13 |

Schumann |

28 |

7 |

10 |

Scriabin |

13 |

7 |

2 |

Total |

1067 |

519 |

222 |

Accesing information¶

Let’s get all the Bach files for this task. We select the .tsv note alignments, the MIDI performance file, the Musicxml Score File and the path for the match file we want to produce.

[4]:

# Selecting a subset of files from the dataset (Only Bach Files for this tutorial)

asap_files = [(os.path.join(root, file),

os.path.join(os.path.dirname(root), os.path.basename(root).split("_")[0]+".mid"),

os.path.join(os.path.dirname(root), "xml_score.musicxml"),

#os.path.join(root, os.path.splitext(file)[0]+".match"))

os.path.join(os.path.dirname(root), os.path.basename(root).split("_")[0]+".match"))

for root, dirs, files in os.walk(os.path.join(DATASET_DIR, "Bach"))

for file in files if file.endswith("note_alignment.tsv")]

For the Bach files in the ASAP dataset we will split on two subsets training and testing. For testing, we choose Bach’s Italian Concerto performances, and for training, we use Bach’s Preludes and Fugues we find in ASAP.

[5]:

_, _, score_files, match_files = zip(*asap_files)

asap_train = [t for t in zip(score_files, match_files) if "Italian_concerto" not in t[0]]

asap_test = [t for t in zip(score_files, match_files) if "Italian_concerto" in t[0]]

To train a pitch spelling model we will need some global description of pitches to perform tokenization.

Pitch Class |

Tonal Pitch Class |

|---|---|

11 |

B♮, C♭, A𝄪 |

10 |

B♭, A♯, C♭ |

9 |

A♮, G♭, B𝄫 |

8 |

A♭, G♯ |

7 |

G♮, F♭, A𝄫 |

6 |

F♯, G♭, E𝄪 |

5 |

F♮, E♯, G𝄫 |

4 |

E♮, F♭, D𝄪 |

3 |

D♯, E♭, F𝄫 |

2 |

D♮, C𝄪, E𝄫 |

1 |

C♯, D♭, B𝄪 |

0 |

C♮, B♯, D𝄫 |

Given this table we may characterize a note by a triplet:

So then a for example A4 = 440Hz would be: - Name = A , - Accidental = 0 or natural and - Octave 4

all together (A, 0, 4).

[6]:

PITCHES = {

0: ["C", "B#", "D--"],

1: ["C#", "B##", "D-"],

2: ["D", "C##", "E--"],

3: ["D#", "E-", "F--"],

4: ["E", "D##", "F-"],

5: ["F", "E#", "G--"],

6: ["F#", "E##", "G-"],

7: ["G", "F##", "A--"],

8: ["G#", "A-"],

9: ["A", "G##", "B--"],

10: ["A#", "B-", "C--"],

11: ["B", "A##", "C-"],

}

accepted_pitches = [ii for i in PITCHES.values() for ii in i]

pitch_to_ix = {p: accepted_pitches.index(p) for p in accepted_pitches}

To create Pitch Spelling data from the ASAP Dataset we will use the matched files of MIDI performances note aligned to scores that we produced earlier. We use the performance notes that have a match in the score that bear the label match. Then we obtain pairs of notes of type (performance note, score note). The encoded performance notes have pitch information in MIDI pitch, meaning integer values from 0-127 (no-pitch spelling) and duration in seconds. The score notes have pitch spelling

available in the form of the aforementioned triplet (note_name, accidental, octave).

Therefore, the steps we need to follow is to expand the performance notes to features and to tokenize the score’s pitch spelling.

For the performance notes we use a 14 length vector that contains: - for the first 12 values a One Hot representation of Pitch Class extracted from the MIDI pitch, followed by - a normalization of midi pitch between 0 and 1, and finally - a duration normalized by minute.

The tokenization of the score notes follows the previous table of the available spellings. Therefore, the pitch spelling task translates to a per note classification task with 35 target classes. Let’s create our features and labels.

[7]:

def tokenize_pitch_spelling(ps_note):

# step = {"A": 0, "B": 1, "C": 2, "D": 3, "E": 4, "F": 5, "G": 6}[]

alter = {0:"", 1:"#", 2:"##", -1:"-", -2:"--"}[ps_note["alter"].item()]

return pitch_to_ix[ps_note["step"].item()+alter]

def extract_features(perf_note):

features = np.zeros((14,))

# One hot of Pitch Class for first 12 entries

features[int(perf_note["pitch"].item()%12)] = 1

# pitch as float

features[12] = perf_note["pitch"].item()/127

# duration normalized per minute

features[13] = perf_note["duration_sec"].item() / 60

return features

def create_data(files):

data, labels = list(), list()

for score_file, match_file in tqdm.tqdm(files):

performance, alignment = pt.load_match(match_file)

score = pt.load_score(score_file)

spart = pt.score.merge_parts(score)

spart = pt.score.unfold_part_maximal(spart, ignore_leaps=False)

matched_notes = [alignment[idx] for idx, d in enumerate(alignment) if d["label"] == "match"]

pna = performance.note_array()

sna = spart.note_array(include_pitch_spelling=True)

X, y = np.zeros((len(matched_notes), 14), dtype=float), np.zeros((len(matched_notes), ), dtype=int)

for idx, match_note in enumerate(matched_notes):

X[idx] = extract_features(pna[np.where(pna["id"] == str(match_note["performance_id"]))])

y[idx] = tokenize_pitch_spelling(sna[np.where(sna["id"] == match_note["score_id"])][["step", "alter", "octave"]])

data.append(X)

labels.append(y)

return data, labels

[8]:

X_train, y_train = create_data(asap_train)

X_test, y_test = create_data(asap_test)

100%|█████████████████████████████████████████████████████████████████████████████████████████████████| 166/166 [01:41<00:00, 1.64it/s]

100%|█████████████████████████████████████████████████████████████████████████████████████████████████████| 3/3 [00:02<00:00, 1.17it/s]

Model¶

In this section we will define a Pitch Spelling model heavily inspired by the PKSpell model by F. Foscarin. It’s a sequential model with an LSTM layer followed by a Linear projection layer. The performance notes are actually sequential since MIDI messages of performances are sequential, counter to the hierarchical representation of the score. Please keep note, that using MIDI performances was not implemented in the original PKSpell and it is only possible to be integrated easily into the model thanks to the partitura package.

[28]:

class PKSpell(nn.Module):

"""Models that decouples key signature estimation from pitch spelling by adding a second RNN.

This model reached state of the art performances for pitch spelling.

"""

def __init__(

self,

input_dim=14,

hidden_dim=100,

pitch_to_ix=pitch_to_ix,

hidden_dim2=24,

rnn_depth=1,

dropout=0.1,

bidirectional=True

):

super(PKSpell, self).__init__()

self.dropout = nn.Dropout(dropout)

self.n_out_pitch = len(pitch_to_ix)

self.hidden_dim = hidden_dim

self.hidden_dim2 = hidden_dim2

# RNN layer.

self.rnn = nn.LSTM(

input_size=input_dim,

hidden_size=hidden_dim // 2 if bidirectional else hidden_dim,

bidirectional=bidirectional,

num_layers=rnn_depth,

)

# Output layers.

self.top_layer_pitch = nn.Linear(hidden_dim, self.n_out_pitch)

# Loss function that we will use during training.

self.loss_pitch = nn.CrossEntropyLoss()

def compute_outputs(self, sentences, sentences_len):

rnn_out, _ = self.rnn(sentences)

rnn_out = self.dropout(rnn_out)

out_pitch = self.top_layer_pitch(rnn_out)

return out_pitch

def forward(self, sentences, pitches, sentences_len):

# First computes the predictions, and then the loss function.

# Compute the outputs. The shape is (max_len, n_sentences, n_labels).

scores_pitch = self.compute_outputs(sentences, sentences_len)

# Flatten the outputs and the gold-standard labels, to compute the loss.

# The input to this loss needs to be one 2-dimensional and one 1-dimensional tensor.

scores_pitch = scores_pitch.view(-1, self.n_out_pitch)

loss = self.loss_pitch(scores_pitch, pitches)

acc = (scores_pitch.argmax(dim=-1) == pitches).float().mean()

return loss, acc

def predict(self, ppart):

# Compute the outputs from the linear units.

pna = ppart.note_array()

features = np.zeros((len(pna),14))

features[np.arange(len(pna)), np.remainder(pna["pitch"], 12)] = 1

features[:, 12] = pna["pitch"]/127

features[:, 13] = pna["duration_sec"] / 60

scores_pitch = self.compute_outputs(torch.tensor([features]).float(), [len(features)])

# Select the top-scoring labels.

predicted_pitch = scores_pitch.argmax(dim=2).squeeze()

spelling_array = [(accepted_pitches[pp][0], {"":0, "#":1, "##":2, "-":-1, "--":-2}[accepted_pitches[pp][1:]], int(pna[i]["pitch"].item()/12)-1)for i, pp in enumerate(predicted_pitch)]

out = np.array(spelling_array, dtype=[('step', '<U1'), ('alter', '<i8'), ('octave', '<i8')])

return out

We will also introduce some Pytorch and Pytorch-Lightning wrappers for the Dataset and the Model.

[29]:

class PSDataset(Dataset):

def __init__(self, x, y):

super(PSDataset, self).__init__()

self.x = x

self.y = y

def __getitem__(self, idx):

return torch.tensor(self.x[idx]), torch.tensor(self.y[idx]).type(torch.LongTensor)

def __len__(self):

return len(self.x)

def collate_ps(data):

def merge(sequences):

lengths = [len(seq) for seq in sequences]

padded_seqs = torch.zeros(len(sequences), max(lengths)).long()

for i, seq in enumerate(sequences):

end = lengths[i]

padded_seqs[i, :end] = seq[:end]

return sequences, lengths

# sort a list by sequence length (descending order) to use pack_padded_sequence

data.sort(key=lambda x: len(x[0]), reverse=True)

# seperate source and target sequences

src_seqs, trg_seqs = zip(*data)

# merge sequences (from tuple of 1D tensor to 2D tensor)

# src_seqs, src_lengths = merge(src_seqs)

# trg_seqs, trg_lengths = merge(trg_seqs)

src_lengths = [len(seq) for seq in src_seqs]

return src_seqs[0].float(), src_lengths, trg_seqs[0]

class PKSpellPL(pl.LightningModule):

def __init__(self):

super(PKSpellPL, self).__init__()

self.module = PKSpell()

def training_step(self, batch, batch_idx):

src_seqs, src_lengths, trg_seqs = batch

loss, acc = self.module(src_seqs, trg_seqs, src_lengths)

self.log("train_loss", loss.item(), on_epoch=True, on_step=True, prog_bar=True)

self.log("train_acc", acc.item(), on_epoch=True, on_step=True, prog_bar=True)

return loss

def validation_step(self, batch, batch_idx):

src_seqs, src_lengths, trg_seqs = batch

loss, acc = self.module(src_seqs, trg_seqs, src_lengths)

self.log("val_loss", loss.item(), on_epoch=True, prog_bar=True)

self.log("val_acc", acc.item(), on_epoch=True, on_step=True, prog_bar=True)

return loss

def configure_optimizers(self):

optimizer = torch.optim.Adam(self.parameters(), lr=0.001, weight_decay=5e-4)

return {

"optimizer": optimizer,

}

def eval_matched(score_file, alignment, performance):

# Load the score and Unfold any repetitions.

score = pt.score.unfold_part_maximal(pt.score.merge_parts(pt.load_score(score_file)), ignore_leaps=False)

sna = score.note_array(include_pitch_spelling=True)

matched_notes = [alignment[idx] for idx, d in enumerate(alignment) if d["label"] == "match"]

score_idxs = list()

matched= np.zeros((len(performance.note_array()), ))

for i, perf_note in enumerate(performance.note_array()):

for match_note in matched_notes:

if match_note["performance_id"] == perf_note["id"]:

score_idxs.append(np.where(sna["id"] == match_note["score_id"])[0].item())

matched[i] = 1

break

score_idxs = np.array(score_idxs)

true_spelling = sna[score_idxs][["step", "alter", "octave"]]

return true_spelling, matched

Train the PKSpell model¶

For training we use Pytorch Lightning Trainer witch includes a training progress visualization and logging of the metrics (Train Loss, Train Accuracy, Validation Loss, and Validation Accuracy).

For this tutorial we keep the training and the model simple which only trainable with a batch of size 1. For more elaborate implementation, please visit the original PKSpell repo.

[30]:

model = PKSpellPL()

train_dataloader = DataLoader(PSDataset(X_train, y_train), collate_fn=collate_ps, batch_size=1)

val_dataloader = DataLoader(PSDataset(X_test, y_test), collate_fn=collate_ps, batch_size=1)

trainer = pl.Trainer(max_epochs=5)

GPU available: True (cuda), used: True

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

[31]:

trainer.fit(model, train_dataloaders=train_dataloader, val_dataloaders=val_dataloader)

LOCAL_RANK: 0 - CUDA_VISIBLE_DEVICES: [0]

| Name | Type | Params

-----------------------------------

0 | module | PKSpell | 29.9 K

-----------------------------------

29.9 K Trainable params

0 Non-trainable params

29.9 K Total params

0.120 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=5` reached.

Using the Model for prediction.¶

Let’s see how we can use our trained PKSpell Model for prediction.

For prediction we only need to provide a midi file and call our model.predict function.

[32]:

# You can input the path to your own MIDI file

MIDI_FILE = asap_files[0][1]

# Load the MIDI File to the performance Object using Partitura

performance = pt.load_performance_midi(MIDI_FILE)

# Remove the module from Lightning to produce single file results.

with torch.no_grad():

pk_spelling = model.module.predict(performance)

[33]:

df = pd.DataFrame(pk_spelling)

df.head()

[33]:

| step | alter | octave | |

|---|---|---|---|

| 0 | C | 0 | 4 |

| 1 | D | 0 | 4 |

| 2 | E | 0 | 4 |

| 3 | F | 0 | 4 |

| 4 | G | 0 | 4 |

In partitura is easy to estimate spelling using the build-in method (PS13 algorithm)

[34]:

partitura_spelling = pt.musicanalysis.estimate_spelling(performance)

df = pd.DataFrame(partitura_spelling)

df.head()

[34]:

| step | alter | octave | |

|---|---|---|---|

| 0 | C | 0 | 4 |

| 1 | D | 0 | 4 |

| 2 | E | 0 | 4 |

| 3 | F | 0 | 4 |

| 4 | G | 0 | 4 |

We can use the same pipeline to compare the spelling of our trained PKSpell model to compare it with the Build-In Partitura Spelling estimation and to the ground truth but for this we will use a match file.

[35]:

# Get a score and a match file

score_file, match_file = asap_test[2]

# Load the Match file

performance, alignment = pt.load_match(match_file)

# Estimate Spelling using the Partitura Music Analysis PS13 algorithm.

baseline_spelling = pt.musicanalysis.estimate_spelling(performance)

# Obtain the prediction using PKSpell

with torch.no_grad():

pk_spelling = model.module.predict(performance)

Obtain the Ground Truth from the score

[36]:

true_spelling, matched = eval_matched(score_file, alignment, performance)

pk_spelling = pk_spelling[matched.astype(bool)]

baseline_spelling = baseline_spelling[matched.astype(bool)]

[37]:

acc_pk = np.all([pk_spelling[key] == true_spelling[key] for key in pk_spelling.dtype.names], axis=0).astype(float).mean().item()

acc_ps13 = np.all([baseline_spelling[key] == true_spelling[key] for key in baseline_spelling.dtype.names], axis=0).astype(float).mean().item()

print("Accuracy PkSpell: {:.3f} | Accuracy Partitura PS13 {:.3f}".format(acc_pk, acc_ps13))

Accuracy PkSpell: 0.989 | Accuracy Partitura PS13 1.000

In this tutorial, we saw how to train a model for Pitch Spelling. The pitch spelling model achieves comparable accuracy to the Baseline model implemented in partitura. Nevertheless, we only used a small amount of data to train it. Using more data, will improve the performance.

Remember, with more data comes more spelling power, and with more spelling power comes more responsibility. So, spell carefully.

[ ]: